The Hidden Dangers of AI Multi-Channel Platforms: A Security Deep Dive

As artificial intelligence systems become increasingly sophisticated and interconnected, Multi-Channel Platforms (MCPs) are emerging as the backbone of modern AI-driven workflows. These platforms orchestrate complex interactions between AI agents, external tools, APIs, and communication channels, creating powerful automation capabilities that can transform business operations. However, with this power comes a new and evolving threat landscape that security professionals must understand and address.

The integration of AI agents with multiple tools and services creates unique attack vectors that blend traditional cybersecurity vulnerabilities with novel AI-specific risks. Unlike conventional applications, MCPs operate in a dynamic environment where natural language processing, automated decision-making, and cross-platform integrations create unprecedented security challenges.

Understanding the MCP Threat Landscape

Multi-Channel Platforms represent a convergence of several technologies: large language models (LLMs), API orchestration, real-time communication protocols, and tool integration frameworks. This convergence creates a complex attack surface where vulnerabilities can manifest in unexpected ways, often bypassing traditional security controls designed for more predictable systems.

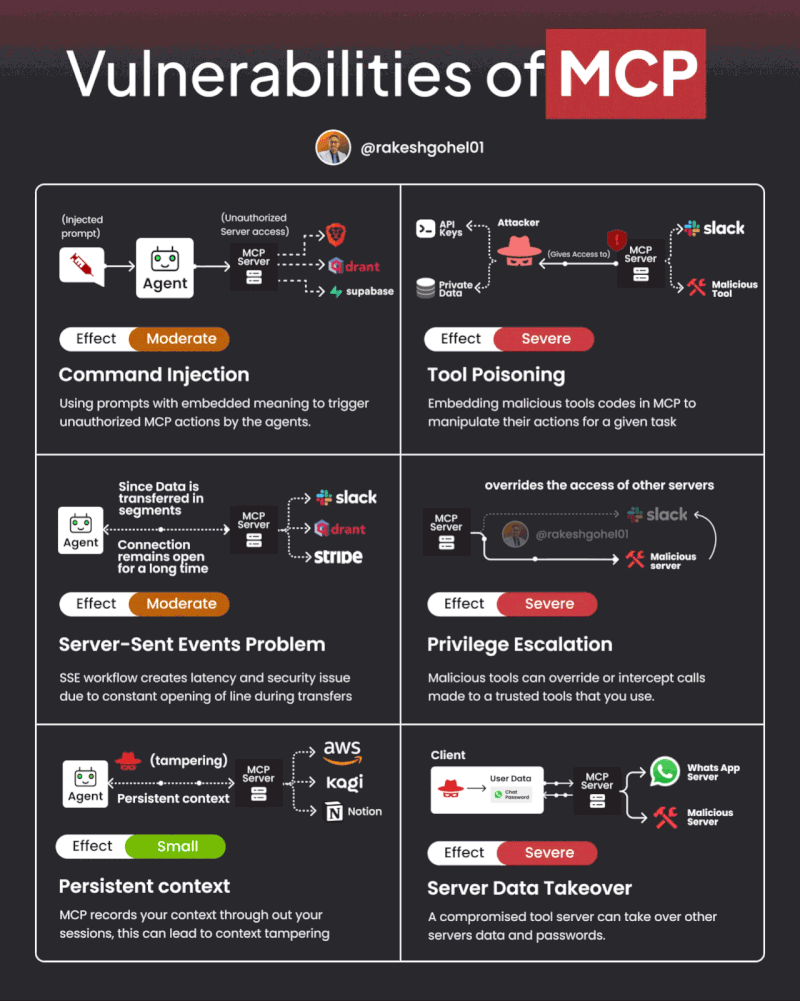

The security risks associated with MCPs fall into six primary categories, each with distinct characteristics and potential impact levels. Understanding these risks is crucial for organizations looking to harness the power of AI while maintaining robust security postures.

1. Command Injection Through Agent Prompts

Risk Level: Moderate | Attack Vector: Prompt Manipulation

One of the most insidious threats to MCP security involves the manipulation of agent prompts to execute unauthorized commands. Unlike traditional command injection attacks that exploit code vulnerabilities, this attack vector leverages the natural language processing capabilities of AI agents.

How It Works

Attackers craft malicious inputs that, when processed by an AI agent, cause the system to perform unintended actions. These inputs can be embedded in:

- User queries and requests

- External data sources that the agent processes

- Inter-tool communication messages

- File uploads or document processing workflows

Real-World Impact

Consider a customer service AI agent integrated with multiple backend systems. An attacker could submit a seemingly innocent support request that contains hidden instructions, causing the agent to:

- Access customer data beyond the scope of the request

- Modify system configurations

- Trigger unauthorized API calls to external services

- Leak sensitive information through response manipulation

Defense Strategies

Input Validation and Sanitization: Implement robust filtering mechanisms that analyze incoming prompts for suspicious patterns and potentially malicious instructions.

Context Boundary Enforcement: Establish clear operational boundaries for AI agents, limiting their ability to perform actions outside their designated scope.

Prompt Security Frameworks: Deploy specialized tools designed to detect and neutralize prompt injection attempts before they reach the agent processing layer.

2. Tool Poisoning: The Supply Chain Attack of AI

Risk Level: Severe | Attack Vector: Malicious Tool Integration

Tool poisoning represents one of the most dangerous threats to MCP environments. This attack involves the injection of malicious code or logic into tools that integrate with the platform, creating a persistent backdoor that can compromise the entire ecosystem.

The Anatomy of Tool Poisoning

MCPs rely on a diverse ecosystem of tools and integrations to extend their capabilities. These tools might include:

- Database connectors and query engines

- File processing utilities

- External API clients

- Communication interfaces

- Data transformation tools

When an attacker successfully compromises one of these tools, they gain the ability to:

- Intercept and modify data flows between the agent and external systems

- Harvest authentication credentials and API keys

- Manipulate agent behavior through poisoned responses

- Establish persistent access to the MCP environment

Case Study: The Compromised Analytics Tool

Imagine an analytics tool integrated with an MCP that processes financial data. If compromised, this tool could:

- Silently exfiltrate sensitive financial information

- Manipulate analysis results to influence business decisions

- Inject false data that propagates throughout the organization's reporting systems

- Use its trusted status to access additional systems and data sources

Mitigation Approaches

Tool Vetting and Code Review: Establish rigorous security assessment processes for all tools before integration, including source code analysis and security testing.

Sandboxed Execution: Deploy tools in isolated environments with limited system access and network connectivity.

Behavioral Monitoring: Implement comprehensive logging and anomaly detection systems that can identify unusual tool behavior patterns.

Supply Chain Security: Maintain detailed inventories of all integrated tools and monitor them for security updates and vulnerability disclosures.

3. Server-Sent Events: The Persistent Connection Problem

Risk Level: Moderate | Attack Vector: Protocol Exploitation

Many MCPs utilize Server-Sent Events (SSE) to maintain real-time communication between agents and external systems. While SSE provides efficient data streaming capabilities, it also creates persistent connections that can be exploited by attackers.

Vulnerability Characteristics

SSE connections are particularly vulnerable because they:

- Maintain long-lived connections that increase exposure windows

- Transfer data in segments over extended periods

- Often lack robust authentication for ongoing sessions

- May not implement proper connection lifecycle management

Attack Scenarios

Connection Hijacking: Attackers who gain access to SSE endpoints can intercept or manipulate data streams, potentially altering agent behavior in real-time.

Resource Exhaustion: Malicious actors can establish numerous persistent connections to overwhelm system resources and create denial-of-service conditions.

Data Leakage: Poorly secured SSE streams can inadvertently expose sensitive information through side-channel attacks or connection eavesdropping.

Security Controls

Connection Management: Implement strict timeout policies and idle connection handling to minimize exposure windows.

Encrypted Channels: Use robust encryption for all SSE communications, particularly when transmitting sensitive data.

Authentication and Authorization: Deploy token-based authentication systems that regularly validate connection legitimacy.

4. Privilege Escalation Across the Tool Ecosystem

Risk Level: Severe | Attack Vector: Trust Relationship Exploitation

The interconnected nature of MCP environments creates complex trust relationships between different tools and services. When one component is compromised, attackers can leverage these trust relationships to escalate privileges and gain unauthorized access to additional systems.

The Cascade Effect

In a typical MCP environment, tools and services often trust each other implicitly. This trust can be exploited through:

- Service Impersonation: Compromised tools can masquerade as legitimate services to access restricted resources

- Token Theft: Authentication credentials can be harvested and reused across multiple systems

- Trust Chain Exploitation: Attackers can follow trust relationships to gain access to increasingly sensitive systems

Critical Risk Factors

Shared Authentication: Many MCPs use shared authentication tokens or service accounts across multiple tools, creating single points of failure.

Over-Privileged Tools: Tools often receive broader permissions than necessary, increasing the potential impact of a compromise.

Weak Inter-Service Communication: Insufficient authentication and authorization controls between services create opportunities for lateral movement.

Defense in Depth

Zero Trust Architecture: Implement comprehensive verification for all inter-service communications, regardless of source.

Principle of Least Privilege: Assign minimal necessary permissions to each tool and service, regularly reviewing and adjusting access rights.

Micro-Segmentation: Isolate high-privilege tools and services in dedicated network segments with strict access controls.

5. Persistent Context Tampering: The Memory Problem

Risk Level: Small | Attack Vector: Context Manipulation

AI agents often maintain contextual memory across sessions to provide consistent and relevant responses. This persistent context, while enhancing user experience, creates opportunities for subtle manipulation attacks that can alter agent behavior over time.

How Context Tampering Works

Attackers can influence agent behavior by:

- Injecting false information into conversation history

- Manipulating session state data

- Corrupting stored user preferences and settings

- Introducing bias through carefully crafted interaction patterns

Long-Term Impact

While individual context tampering attacks may seem minor, their cumulative effect can be significant:

- Gradual degradation of agent reliability

- Introduction of systematic bias in decision-making

- Compromise of user trust and confidence

- Potential for data corruption across multiple sessions

Protective Measures

Context Hygiene: Implement regular context clearing and sanitization processes, particularly between different users or sensitive operations.

Integrity Verification: Use cryptographic techniques to ensure context data hasn't been tampered with between sessions.

User Control: Provide users with visibility into and control over their agent's memory and context data.

6. Server Data Takeover: The Ultimate Compromise

Risk Level: Severe | Attack Vector: Infrastructure Compromise

The most severe threat to MCP environments involves the complete compromise of tool servers or platform infrastructure. This type of attack can provide attackers with comprehensive access to organizational data and systems.

Attack Progression

A successful server takeover typically follows this pattern:

- Initial Compromise: Exploitation of vulnerabilities in tool servers or platform components

- Credential Harvesting: Collection of authentication tokens, API keys, and service credentials

- Lateral Movement: Use of harvested credentials to access additional systems and data sources

- Data Exfiltration: Large-scale theft of sensitive organizational information

- Persistent Access: Establishment of backdoors for ongoing access

Organizational Impact

The consequences of a server data takeover can be catastrophic:

- Complete exposure of sensitive business data

- Compromise of customer information and privacy

- Disruption of critical business operations

- Regulatory compliance violations

- Significant financial and reputational damage

Enterprise Defense Strategies

Infrastructure Hardening: Implement comprehensive security controls for all server infrastructure, including regular patching, access controls, and monitoring.

Network Segmentation: Isolate MCP infrastructure from other critical systems using network-level controls and monitoring.

Incident Response Planning: Develop and regularly test incident response procedures specifically designed for MCP compromise scenarios.

Data Loss Prevention: Deploy comprehensive data protection controls that can detect and prevent unauthorized data access and exfiltration.

Building a Comprehensive MCP Security Strategy

Protecting against MCP security risks requires a multi-layered approach that addresses both traditional cybersecurity concerns and AI-specific vulnerabilities. Organizations should consider the following strategic elements:

Risk Assessment and Threat Modeling

Conduct comprehensive risk assessments that specifically address MCP-related threats. This should include:

- Mapping of all tools, services, and integrations within the MCP ecosystem

- Analysis of data flows and trust relationships

- Identification of high-risk components and attack paths

- Regular review and updating of threat models as the platform evolves

Security by Design

Integrate security considerations into every aspect of MCP development and deployment:

- Implement secure coding practices for all custom tools and integrations

- Design systems with defense in depth and zero trust principles

- Establish security requirements for third-party tools and services

- Regular security testing and validation of all platform components

Monitoring and Detection

Deploy comprehensive monitoring solutions that can detect AI-specific attacks:

- Prompt injection detection systems

- Behavioral analysis for agent and tool activities

- Real-time monitoring of inter-service communications

- Anomaly detection for unusual data access patterns

Incident Response and Recovery

Develop incident response capabilities specifically tailored to MCP environments:

- Procedures for isolating compromised tools and services

- Methods for preserving evidence from AI agent interactions

- Recovery processes that account for context and memory corruption

- Communication plans for AI-related security incidents

The Future of MCP Security

As AI technology continues to evolve, so too will the security challenges associated with Multi-Channel Platforms. Organizations must stay ahead of emerging threats by:

- Investing in AI Security Research: Support and participate in research initiatives focused on AI security and safety

- Building Security Expertise: Develop internal capabilities for understanding and addressing AI-specific security risks

- Collaborating with the Security Community: Share threat intelligence and best practices with other organizations facing similar challenges

- Preparing for Regulatory Changes: Anticipate and prepare for evolving regulatory requirements related to AI security and privacy

Emerging Threats: Nine Additional MCP Security Risks

As the Model Context Protocol ecosystem continues to evolve and mature, security researchers have identified several additional attack vectors that extend beyond the initial six vulnerability categories. These emerging threats highlight the dynamic nature of MCP security challenges and underscore the need for continuous vigilance as adoption accelerates.

Recent analysis by security firms and academic researchers reveals that 43% of MCP servers contain injection flaws, while the rapid deployment of AI systems has outpaced the development of corresponding security controls. This section explores nine critical additional risks that organizations must address to maintain comprehensive MCP security.

7. Cross-Server Tool Shadowing and Cross-Tool Contamination

Risk Level: High | Attack Vector: Inter-Service Manipulation

One of the most sophisticated threats to emerge involves malicious MCP servers that can interfere with or override legitimate tools through a technique known as cross-server tool shadowing. This attack allows a compromised or malicious server to intercept calls intended for trusted tools, creating a man-in-the-middle scenario within the MCP ecosystem.

Attack Mechanics

In cross-server tool shadowing attacks, malicious servers register tools with names or descriptions that closely match legitimate services. When an AI agent attempts to invoke a trusted tool, the malicious server can:

- Intercept Tool Calls: Redirect requests meant for legitimate tools to malicious implementations

- Data Harvesting: Capture sensitive information intended for trusted services

- Response Manipulation: Alter or poison responses before they reach the AI agent

- Stealth Operations: Operate undetected by mimicking expected tool behavior

Cross-Tool Contamination Effects

The contamination can spread across the entire tool ecosystem through:

- Behavioral Influence: Malicious tools instructing agents on how other tools should be used

- Context Poisoning: Injecting false information that affects subsequent tool interactions

- Trust Chain Corruption: Undermining the reliability of tool recommendations and selections

Mitigation Strategies

Tool Registry Verification: Implement cryptographic signing and verification for all tool registrations to ensure authenticity.

Namespace Isolation: Create strict namespacing protocols that prevent tool name conflicts and impersonation.

Behavioral Monitoring: Deploy real-time monitoring systems that can detect unusual patterns in tool interactions and response characteristics.

8. Supply Chain Attacks via Unofficial Registries

Risk Level: Severe | Attack Vector: Distribution Channel Compromise

The absence of official MCP repositories creates significant opportunities for supply chain attacks. Threat actors can distribute malicious MCP servers through unofficial channels, leveraging the trust and convenience that developers expect from package management systems.

The Unofficial Registry Problem

Without standardized, security-audited repositories, the MCP ecosystem currently relies on:

- Unverified Package Repositories: Public registries without security screening processes

- Direct GitHub Distributions: Individual repositories that may lack security oversight

- Community-Driven Collections: Informal tool sharing that bypasses traditional vetting

Attack Scenarios

Brand Impersonation: Malicious actors create MCP servers with legitimate company branding and icons to deceive users into trusting and installing compromised tools.

Typosquatting: Registering server names that closely resemble popular legitimate tools, exploiting developer typing errors during installation.

Dependency Confusion: Exploiting naming conflicts between private and public repositories to inject malicious packages into development workflows.

Installation Simplicity Increases Risk

The ease of MCP server installation—often requiring only a simple pip install command—means that security verification steps are frequently bypassed in favor of development speed.

Protection Measures

Vendor Verification: Establish processes for verifying the legitimacy of MCP server publishers before installation.

Code Review Requirements: Implement mandatory security reviews for all third-party MCP integrations.

Sandboxed Testing: Deploy new MCP servers in isolated environments before integrating them into production systems.

9. Rug Pull Attacks and Silent Definition Mutations

Risk Level: Moderate | Attack Vector: Post-Installation Compromise

Rug pull attacks represent a particularly insidious threat where MCP tools operate legitimately for extended periods before revealing their malicious nature. This delayed activation makes detection extremely difficult and can establish deep trust before the attack is executed.

The Rug Pull Timeline

- Initial Deployment: Tool functions exactly as advertised, building user trust and adoption

- Trust Building Phase: Extended period of legitimate operation, often months

- Trigger Event: Activation based on time, usage thresholds, or external signals

- Malicious Activation: Sudden shift to harmful behavior when trust is maximized

Silent Definition Mutations

These attacks involve subtle changes to tool definitions and behaviors that occur without user notification:

- Gradual Permission Escalation: Slowly expanding tool permissions over time

- Behavioral Drift: Incrementally altering tool responses to influence agent decisions

- Function Substitution: Replacing legitimate tool functions with malicious alternatives

Detection Challenges

Trust Exploitation: Users become comfortable with tools that have operated reliably, reducing vigilance over time.

Gradual Changes: Small, incremental modifications are less likely to trigger security alerts or user suspicion.

Legacy Trust: Established tools with long operation histories may receive less scrutiny during updates.

Defensive Strategies

Continuous Monitoring: Implement persistent behavioral analysis that can detect changes in tool operation patterns.

Version Control Tracking: Maintain detailed logs of all tool updates and definition changes.

Trust Verification: Regular re-validation of tool permissions and capabilities, regardless of historical performance.

10. Agent-to-Agent Attack Propagation

Risk Level: High | Attack Vector: Multi-Agent System Exploitation

As MCP environments increasingly feature multiple AI agents working collaboratively, new attack vectors emerge that exploit the trust relationships and communication channels between agents. These attacks can propagate through agent networks, amplifying their impact beyond single-agent compromises.

Inter-Agent Communication Vulnerabilities

Steganographic Channels: Compromised agents can establish covert communication methods, embedding malicious instructions in seemingly innocent agent-to-agent interactions.

Coordinated Deception: Multiple compromised agents can collaborate to execute attacks that appear harmless when viewed individually but create significant impact when coordinated.

Trust Chain Exploitation: Agents often inherit trust relationships, allowing compromised agents to leverage trusted status to access additional systems and data.

Network Effect Amplification

Cascading Privacy Leaks: Compromised agents can trigger chain reactions where private information spreads across multiple agents and systems.

Jailbreak Proliferation: Successful prompt injection attacks can propagate across agent boundaries, spreading malicious behaviors throughout the network.

Collective Manipulation: Groups of agents can be coordinated to manipulate shared environments like markets, databases, or communication systems.

Multi-Agent Defense Approaches

Agent Isolation: Implement strict communication protocols that limit inter-agent trust relationships and information sharing.

Behavioral Consensus: Deploy systems that require multiple independent agent verifications for sensitive operations.

Network Segmentation: Create logical boundaries between agent groups to prevent attack propagation.

11. Malicious Preference Manipulation Attacks (MPMA)

Risk Level: Moderate | Attack Vector: Economic and Decision Bias

Malicious Preference Manipulation Attacks represent a sophisticated form of economic warfare within MCP ecosystems, where malicious servers manipulate AI decision-making processes to gain unfair advantages in tool selection and usage.

Attack Methodology

Tool Description Manipulation: Crafting tool descriptions that exploit AI selection algorithms to favor malicious services over legitimate alternatives.

Economic Bias Injection: Manipulating cost-benefit calculations that AI agents use when selecting between competing tools and services.

Genetic Algorithm Variants (GAPMA): Advanced attacks that use evolutionary algorithms to optimize manipulation techniques over time, making detection increasingly difficult.

Market Manipulation Scenarios

Service Monopolization: Gradually steering all AI tool selections toward attacker-controlled services.

Data Harvesting Networks: Creating preferred tool ecosystems that funnel sensitive information to centralized collection points.

Competitive Sabotage: Undermining competitor tools through negative bias injection and false performance reporting.

Economic Impact

These attacks can create significant market distortions, affecting:

- Tool marketplace fairness and competition

- Pricing mechanisms for AI services

- Innovation incentives for legitimate developers

- Trust in automated decision-making systems

Counter-Manipulation Strategies

Selection Algorithm Transparency: Implement explainable AI systems that can reveal the reasoning behind tool selection decisions.

Market Diversity Enforcement: Deploy systems that actively promote tool diversity and prevent monopolistic selection patterns.

Economic Anomaly Detection: Monitor for unusual patterns in tool selection that may indicate manipulation attempts.

12. Consent Fatigue and Permission Abuse

Risk Level: Small | Attack Vector: Authorization Erosion

While individually minor, consent fatigue represents a gradual erosion of security through the exploitation of human psychology and system design flaws. This attack vector leverages the tendency for users to become less vigilant about permissions over time.

The Fatigue Cycle

Initial Vigilance: Users carefully review permissions when first installing MCP tools.

Routine Acceptance: Repeated permission requests lead to automatic approval without careful review.

Expanded Scope Creep: Tools gradually request additional permissions that users approve without full consideration.

Persistent Reuse: Previously granted permissions are reused without re-prompting, extending their scope beyond initial intent.

Permission Expansion Techniques

Bundled Requests: Combining legitimate permissions with excessive access rights in single approval requests.

Temporal Expansion: Extending permission duration beyond what's necessary for stated functionality.

Scope Creep: Gradually expanding the use of existing permissions to cover additional, undisclosed functionalities.

User Psychology Exploitation

Decision Fatigue: Overwhelming users with frequent permission requests to reduce scrutiny of individual approvals.

Trust Transfer: Leveraging trust earned through legitimate operations to gain approval for questionable permissions.

Urgency Manipulation: Creating artificial time pressure to encourage hasty permission approvals.

Mitigation Through Design

Permission Granularity: Implement fine-grained permission systems that allow users to grant specific, limited access rights.

Temporal Limits: Automatically expire permissions after defined periods, requiring fresh approval for continued access.

Usage Transparency: Provide clear visibility into how and when granted permissions are being utilized.

13. Session ID Exposure and Protocol Vulnerabilities

Risk Level: Small | Attack Vector: Protocol Design Exploitation

The MCP protocol specification includes design decisions that, while functionally necessary, create potential security vulnerabilities through session management and communication protocols.

Session Management Vulnerabilities

URL-Embedded Session IDs: The protocol's requirement for session identifiers in URLs creates opportunities for session hijacking through:

- Log file exposure in web servers and proxy systems

- Browser history exploitation on shared systems

- Referrer header leakage to external services

- Social engineering attacks targeting session URLs

Protocol-Level Weaknesses

Insufficient Authentication Standards: The protocol lacks comprehensive authentication requirements, leaving implementation details to individual developers.

Weak Session Validation: Limited mechanisms for verifying session integrity and preventing session replay attacks.

Context Encryption Gaps: Inadequate requirements for encrypting sensitive context data during transmission.

Implementation Inconsistencies

Different MCP implementations may handle security requirements inconsistently, creating:

- Security Assumption Mismatches: Clients and servers with different security expectations

- Interoperability Vulnerabilities: Security gaps created when different implementations interact

- Upgrade Path Risks: Security regressions during protocol version transitions

Protocol Hardening Recommendations

Session Security Standards: Establish mandatory requirements for secure session management across all MCP implementations.

Encryption Requirements: Define minimum encryption standards for all MCP communications, particularly for sensitive data.

Authentication Protocols: Implement standardized authentication mechanisms that ensure consistent security across implementations.

14. Cross-Channel Information Leakage

Risk Level: Moderate | Attack Vector: Context Boundary Violations

As MCP systems integrate multiple communication channels and data sources, the risk of information leakage between channels creates significant privacy and security concerns. This vulnerability arises from inadequate isolation between different communication contexts.

Leakage Scenarios

Channel Cross-Contamination: Information from private Slack conversations appearing in WhatsApp responses, or confidential email content being referenced in public chat systems.

Context Bleeding: AI agents carrying sensitive context from one channel into inappropriate channels, potentially exposing private information to unauthorized recipients.

Authorization Boundary Violations: Information accessible in high-security contexts being inadvertently shared in lower-security environments.

Technical Causes

Shared Memory Spaces: MCP implementations that don't properly isolate memory between different channel integrations.

Context Persistence: AI agents maintaining context across channel boundaries without proper access control validation.

Insufficient Channel Mapping: Poor implementation of channel-specific access controls and data handling policies.

Privacy Impact Assessment

Personal Information Exposure: Private communications or personal data appearing in inappropriate contexts.

Business Confidentiality Breaches: Sensitive business information leaking from secure channels to public or less secure environments.

Compliance Violations: Regulatory violations resulting from inappropriate data sharing across channels with different compliance requirements.

Isolation and Prevention Strategies

Channel Segmentation: Implement strict logical separation between different communication channels and their associated data.

Context Boundary Enforcement: Deploy systems that actively prevent context leakage between different authorization domains.

Channel-Specific Policies: Establish and enforce distinct data handling and sharing policies for each integrated communication channel.

The Expanding Threat Landscape

These nine additional risk categories demonstrate that MCP security is a rapidly evolving field. The combination of traditional cybersecurity threats with AI-specific vulnerabilities creates a complex landscape that requires continuous attention and adaptation.

The emergence of these new attack vectors highlights several critical themes:

Trust Exploitation: Many attacks leverage the trust relationships inherent in AI systems, whether between agents, tools, or users.

Economic Warfare: The competitive nature of AI tool ecosystems creates opportunities for market manipulation and unfair advantage.

Human Psychology: Attacks increasingly target human decision-making processes, exploiting fatigue, trust, and cognitive biases.

Protocol Immaturity: The rapid development of MCP has outpaced security standardization, creating implementation inconsistencies and vulnerabilities.

Network Effects: The interconnected nature of modern AI systems means that vulnerabilities can have cascading effects across entire ecosystems.

Organizations implementing MCP systems must recognize that security is not a one-time consideration but an ongoing process that must evolve alongside the technology. The fifteen total risk categories identified—from the original six to these nine additional threats—represent just the beginning of what promises to be a continuous security evolution in the AI-driven future.

Conclusion

Multi-Channel Platforms represent a significant advancement in AI technology, offering unprecedented capabilities for automation and intelligent decision-making. However, these platforms also introduce complex security challenges that require careful consideration and robust defensive measures.

The six categories of MCP security risks—command injection, tool poisoning, SSE vulnerabilities, privilege escalation, context tampering, and server compromise—each require specific defensive strategies and ongoing vigilance. Organizations that successfully address these challenges will be well-positioned to harness the power of AI while maintaining strong security postures.

The key to successful MCP security lies in understanding that these platforms require a new approach to cybersecurity—one that combines traditional security practices with AI-specific protections. By implementing comprehensive security strategies that address both current and emerging threats, organizations can confidently embrace the transformative potential of AI-driven automation while protecting their most valuable assets.

As the MCP landscape continues to evolve, security professionals must remain vigilant, adaptive, and committed to staying ahead of emerging threats. The future of AI security depends on our collective ability to understand, anticipate, and effectively counter the risks inherent in these powerful new technologies.