AI Security Testing: Machine Learning Model Assessment and Protection

As artificial intelligence becomes integral to industries from healthcare to finance, securing machine learning (ML) models against evolving threats is critical. This article explores methodologies for assessing vulnerabilities, protecting models, and implementing robust security practices.

AI Security Fundamentals

AI security focuses on safeguarding data, models, and infrastructure from threats like adversarial attacks, data breaches, and unauthorized access. Key risks include:

- Data Exposure: Sensitive training data leakage through model inversion or extraction[1][3].

- Adversarial Manipulation: Inputs crafted to deceive models into incorrect predictions[2][6].

- Model Theft: Reverse-engineering proprietary models to replicate functionality[1][15].

Mitigation starts with encryption, access controls, and compliance with regulations such as GDPR and HIPAA[1][7].

Model Vulnerability Assessment

Vulnerability assessments identify weaknesses in ML pipelines through:

| Assessment Type | Focus | Tools/Methods |
|---|---|---|
| Static Analysis | Code, dependencies, and configurations | SAST tools, dependency scanners[4][10] |
| Dynamic Testing | Runtime behavior and API interactions | DAST tools, API fuzzing[6][8] |
| Adversarial Simulation | Resilience against malicious inputs | Red teaming, adversarial libraries[2][6] |

Platforms like AppSOC and Robust Intelligence automate scanning for vulnerabilities such as toxic outputs, prompt injections, and insecure dependencies[2][6].

Data Poisoning Detection

Data poisoning involves injecting malicious samples into training data to corrupt model behavior. Detection strategies include:

- Anomaly Detection: Identifying statistical outliers in training datasets[11].

- Data Sanitization: Removing suspicious samples pre-training[15].

- Model Monitoring: Tracking performance drifts indicative of tampering[6].

For example, a healthcare AI misdiagnosing patients due to poisoned data underscores the need for rigorous validation[11].
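The anomaly detection strategy listed above can be sketched with a robust outlier score. This is a minimal illustration using only the standard library, not a complete poisoning detector: the `flag_outliers` helper, the 3.5 threshold, and the sample feature values are assumptions made for the example, and real pipelines would typically score many features at once.

```python
import statistics

def flag_outliers(values, threshold=3.5):
    """Return indices whose modified z-score exceeds `threshold`.

    Uses the median and the median absolute deviation (MAD), which are
    less distorted by the outliers themselves than mean and standard deviation.
    """
    median = statistics.median(values)
    abs_dev = [abs(v - median) for v in values]
    mad = statistics.median(abs_dev)
    if mad == 0:
        return []  # no spread to measure against; nothing can be flagged
    # 0.6745 rescales MAD so the score is comparable to a standard z-score
    return [i for i, d in enumerate(abs_dev) if 0.6745 * d / mad > threshold]

# Hypothetical feature column with two injected extreme values
feature = [0.9, 1.1, 1.0, 0.95, 1.05, 9.0, 1.02, 0.98, -7.5, 1.01]
suspicious = flag_outliers(feature)
print(suspicious)
```

Flagged samples are candidates for review rather than proof of tampering; carefully crafted poison can sit inside the normal range, which is why the list pairs outlier checks with sanitization and monitoring.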

Model Protection Techniques

Protecting ML models involves multiple layers:

- Encryption: Securing data in transit and at rest using AES-256 or homomorphic encryption[3][15].

- Differential Privacy: Adding calibrated noise to the data, to gradients during training, or to released outputs so that individual records cannot be inferred[1][6].

- Model Obfuscation and Watermarking: Obfuscating model code and weights to deter reverse-engineering, and embedding watermarks so that stolen copies can be identified[15].

- Access Controls: Role-based permissions for model access and API endpoints[3][7].
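To make the differential privacy item more concrete, the sketch below applies the Laplace mechanism to a simple count query. It illustrates the noise-adding idea on a released statistic rather than on a full training procedure. The function names, the `ages` records, and the epsilon value are assumptions for the example, and the seeded generator is there only for reproducibility; a real deployment would need a cryptographically secure noise source.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    A count changes by at most 1 when one record is added or removed,
    so its sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)  # fixed seed only so the sketch is reproducible
ages = [34, 51, 29, 62, 47, 58, 41, 66]  # hypothetical records
noisy = private_count(ages, lambda a: a >= 50, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller epsilon gives stronger privacy but noisier answers, so the value is a policy decision rather than a purely technical one.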

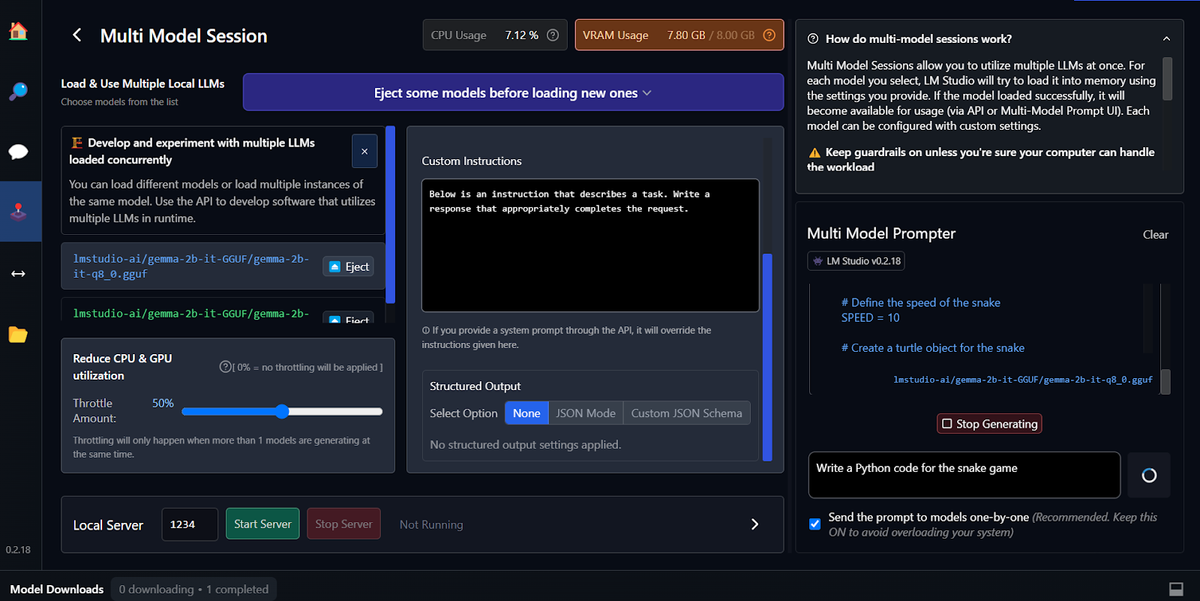

Adversarial Testing

Adversarial testing simulates real-world attacks to evaluate model robustness:

- Red Teaming: Automated tools like IBM Adversarial Robustness Toolbox generate adversarial examples (e.g., perturbed images)[5][6].

- Defense Mechanisms:

  - Adversarial Training: Exposing models to adversarial samples during training[1][6].

  - Input Validation: Sanitizing inputs via regex filters or ML-based anomaly detection[7][8].
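As a rough illustration of how adversarial examples are generated, the sketch below implements the Fast Gradient Sign Method (FGSM) by hand for a tiny logistic regression model. It does not use the Adversarial Robustness Toolbox; the weights, input, and epsilon are hypothetical values chosen so the effect is easy to see.

```python
import math

def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(weights, bias, x, y, epsilon):
    """Fast Gradient Sign Method for logistic regression.

    For cross-entropy loss the gradient with respect to the input is
    (p - y) * w, so each feature moves by epsilon in the direction
    that increases the loss.
    """
    p = predict(weights, bias, x)
    grad = [(p - y) * w for w in weights]
    sign = [(g > 0) - (g < 0) for g in grad]
    return [xi + epsilon * s for xi, s in zip(x, sign)]

weights, bias = [2.0, -1.0], 0.0   # hypothetical trained parameters
x, y = [1.0, 0.5], 1               # a correctly classified input
x_adv = fgsm(weights, bias, x, y, epsilon=1.0)

clean_p = predict(weights, bias, x)
adv_p = predict(weights, bias, x_adv)
print(f"clean={clean_p:.3f} adversarial={adv_p:.3f}")
```

Adversarial training, in its simplest form, would add pairs such as `(x_adv, y)` back into the training set so the model learns to classify them correctly.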

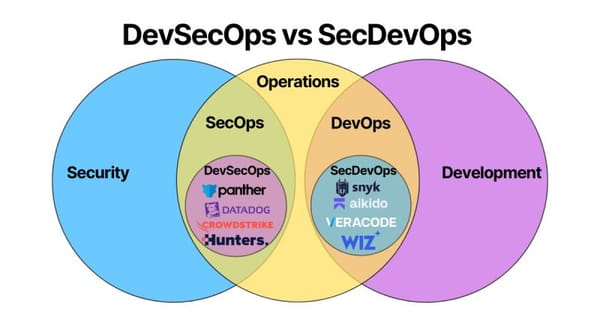

Security Monitoring for AI

Continuous monitoring frameworks detect anomalies and enforce policies:

- Real-Time Alerts: Flagging suspicious activities like abnormal API traffic[7].

- Model Cards: Documenting performance metrics, vulnerabilities, and compliance status[6].

- Integration with SIEM: Correlating AI risks with broader IT security events[7].

Tools like Wiz’s AI Security Posture Management provide dashboards for centralized oversight[7].
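A real-time alert on abnormal API traffic can be approximated with a sliding-window request counter. The sketch below is one simple way to do it, assuming a hypothetical `RateMonitor` class, made-up thresholds, and an in-memory log; production systems would usually feed such signals into a SIEM rather than a Python list.

```python
from collections import deque

class RateMonitor:
    """Flag a client that exceeds `max_requests` within `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.timestamps = {}  # client id -> deque of recent request times

    def record(self, client_id, timestamp):
        """Record one request; return True if it should raise an alert."""
        recent = self.timestamps.setdefault(client_id, deque())
        recent.append(timestamp)
        # Drop requests that have fallen out of the sliding window
        while recent and timestamp - recent[0] >= self.window_seconds:
            recent.popleft()
        return len(recent) > self.max_requests

monitor = RateMonitor(max_requests=3, window_seconds=10)
# Hypothetical request log: (client, seconds since start)
log = [("a", 0), ("a", 2), ("b", 3), ("a", 4), ("a", 5), ("a", 30)]
alerts = [(c, t) for c, t in log if monitor.record(c, t)]
print(alerts)
```

High query volume from a single client is also a common signal of model extraction attempts, so the same counter can serve both purposes.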

Compliance and Ethics

Regulatory adherence and ethical AI practices include:

- Standards Alignment: NIST AI RMF, OWASP LLM Top 10, and MITRE ATLAS frameworks[7][9].

- Bias Mitigation: Auditing models for discriminatory patterns using fairness metrics[6][9].

- Transparency: Disclosing model limitations and data sources to stakeholders[9][15].
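One widely used fairness metric for the bias audit mentioned above is the demographic parity difference, the gap in positive-prediction rates between groups. The sketch below computes it from scratch; the predictions and group labels are hypothetical, and which metric is appropriate depends on the application.

```python
def selection_rates(predictions, groups):
    """Fraction of positive predictions (1) within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical binary predictions and group labels
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["x", "x", "x", "x", "y", "y", "y", "y"]
gap = demographic_parity_difference(preds, groups)
print(gap)
```

A gap of 0 means every group receives positive predictions at the same rate; larger values are a prompt for closer review, not a verdict on their own.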

Future Security Challenges

Emerging threats demand proactive measures:

- Quantum Attacks: Breaking encryption protocols securing AI models[15].

- AI-Generated Deepfakes: Detecting synthetic media used in social engineering[6].

- Supply Chain Risks: Vulnerabilities in third-party AI libraries and APIs[2][7].

Collaborative efforts like the Coalition for Secure AI aim to standardize defenses[7].

Securing machine learning models requires a multi-layered approach combining vulnerability assessments, adversarial testing, and continuous monitoring. By adopting frameworks like NIST AI RMF and tools such as AI Validation platforms, organizations can mitigate risks while fostering innovation. As AI evolves, staying ahead of threats will hinge on collaboration, transparency, and adaptive security practices.

Adversarial testing represents a paradigm shift in cybersecurity evaluation by simulating real-world attack scenarios, contrasting sharply with traditional security testing's focus on vulnerability identification. Here's how they differ across key dimensions:

Core Objectives

- Adversarial Testing: Validates defenses against realistic attack chains built from attacker tactics, techniques, and procedures (TTPs) to identify gaps in detection, response, and resilience[1][5][8].

- Traditional Testing: Focuses on cataloging known vulnerabilities through automated scans or compliance checklists[6][8].

Methodology Comparison

| Aspect | Adversarial Testing | Traditional Testing |
|---|---|---|
| | Emulates attacker behaviors (MITRE ATT&CK) | Scans for CVEs/misconfigurations |
| | Red teaming, breach simulation (BAS), exploit kits | SAST/DAST scanners, compliance tools |
| | End-to-end attack lifecycle (initial access to data exfiltration) | Isolated vulnerability detection |
| | Hybrid (manual + automated attack simulations) | Primarily automated |

Key Differentiators

1. Attack Realism

Adversarial testing replicates advanced persistent threats (APTs) using techniques like:

- Credential phishing campaigns mirroring current attacker templates[5]

- Exploiting chained vulnerabilities (e.g., combining unpatched services with weak IAM policies)[4]

- Testing security tool efficacy during active breaches (e.g., bypassing EDR solutions)[7]

Traditional methods often miss these interconnected risks due to siloed vulnerability checks[6][8].

2. Defense Validation

Adversarial approaches test both preventive controls and operational capabilities:

- Mean Time to Detect (MTTD) during simulated ransomware deployment

- Security team response effectiveness to forged OAuth token attacks

- Logging completeness for forensic analysis[4][7]
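Mean Time to Detect, the first metric listed above, can be computed directly from an exercise log. The sketch below assumes a hypothetical list of (attack start, first alert) timestamp pairs and treats undetected attacks as a separate count rather than folding them into the average.

```python
from datetime import datetime

def mean_time_to_detect(incidents):
    """Average gap in minutes between attack start and first detection.

    Incidents that were never detected are excluded from the mean and
    reported separately, since they have no detection time.
    """
    gaps = [
        (detected - started).total_seconds() / 60
        for started, detected in incidents
        if detected is not None
    ]
    missed = sum(1 for _, detected in incidents if detected is None)
    mttd = sum(gaps) / len(gaps) if gaps else None
    return mttd, missed

# Hypothetical exercise log: (simulated attack start, first alert or None)
incidents = [
    (datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 9, 20)),
    (datetime(2025, 1, 6, 13, 0), datetime(2025, 1, 6, 13, 40)),
    (datetime(2025, 1, 7, 10, 0), None),
]
mttd_minutes, missed = mean_time_to_detect(incidents)
print(mttd_minutes, missed)
```

Reporting the missed count alongside the mean keeps a low MTTD from hiding attacks that were never noticed at all.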

Traditional assessments rarely evaluate these operational metrics[8].

3. Risk Prioritization

Adversarial testing contextualizes vulnerabilities by demonstrating:

- Which SQLi flaws are actually exploitable given network segmentation

- Whether exposed API keys enable lateral movement

- Business impact of compromised CI/CD pipelines[5][7]

Traditional reports often lack this exploitability context, leading to misprioritized remediation[6].

Implementation Models

Adversarial Frameworks

- Breach & Attack Simulation (BAS): Automated platforms like Picus Security that continuously test defenses against updated TTPs[8]

- Adversarial Controls Testing (ACT): Offensive exercises validating email/endpoint security against credential harvesting & malware[5]

- Purple Teaming: Collaborative exercises where red teams attack while blue teams refine detection rules in real time[1][4]

Traditional Models

- Vulnerability scanning (Nessus, Qualys)

- Static code analysis (Checkmarx, SonarQube)

- Compliance audits (PCI DSS, HIPAA checklists)

Outcome Comparison

| Metric | Adversarial Testing | Traditional Testing |
|---|---|---|
| | 94% of emulated ransomware campaigns[8] | 68% of CVEs in NVD database[6] |
| | <5% (validated exploits) | 25-40% (uncontextualized alerts) |
| | Measures response efficacy | No operational insights |

When to Use Each Approach

- Adversarial Testing:

- Traditional Testing:

Leading organizations combine both: 78% of enterprises now use adversarial testing to supplement traditional scans, reducing breach impact by 41%[8]. By stress-testing defenses against evolving TTPs, adversarial methods provide the missing link between vulnerability existence and actual business risk.

Citations:

[1] https://www.tonex.com/training-courses/certified-ai-security-fundamentals-caisf/

[2] https://www.appsoc.com/blog/ai-security-testing---ensuring-model-and-system-integrity

[3] https://gca.isa.org/blog/how-to-secure-machine-learning-data

[4] https://www.indusface.com/blog/explore-vulnerability-assessment-types-and-methodology/

[5] https://www.linkedin.com/advice/1/what-best-way-test-machine-learning-model-security-8q0vf

[6] https://www.robustintelligence.com/platform/ai-validation

[7] https://www.wiz.io/academy/ai-security

[8] https://www.cobalt.io/blog/introduction-to-ai-penetration-testing

[9] https://www.ncsc.gov.uk/files/Principles-for-the-security-of-machine-learning.pdf

[10] https://www.getastra.com/blog/security-audit/vulnerability-assessment-methodology/

[11] https://www.blackduck.com/blog/how-to-safeguard-your-ai-ecosystem.html

[12] https://www.cisa.gov/resources-tools/resources/pilot-artificial-intelligence-enabled-vulnerability-detection

[13] https://niccs.cisa.gov/education-training/catalog/tonex-inc/certified-ai-security-fundamentals-caisf

[14] https://learn.microsoft.com/en-us/training/modules/introduction-ai-security-testing/

[15] https://cpl.thalesgroup.com/blog/software-monetization/how-to-protect-your-machine-learning-models

[16] https://www.ninjaone.com/blog/machine-learning-in-cybersecurity/

[17] https://www.udemy.com/course/ai-security-essentials/

[18] https://checkmarx.com/learn/appsec/the-complete-guide-to-ai-application-security-testing/

[19] https://www.proofpoint.com/us/threat-reference/machine-learning

[20] https://learn.microsoft.com/en-us/training/paths/ai-security-fundamentals/

[21] https://owasp.org/www-project-ai-security-and-privacy-guide/

[22] https://www.tanium.com/blog/machine-learning-in-cybersecurity/

[23] https://learn.microsoft.com/en-us/training/modules/fundamentals-ai-security/

[24] https://www.paloaltonetworks.com/cyberpedia/ai-security

[25] https://mixmode.ai/what-is/machine-learning-101-for-cybersecurity/

[26] https://www.academy.attackiq.com/courses/foundations-of-ai-security

[27] https://owaspai.org/docs/ai_security_overview/

[28] https://www.imperva.com/learn/application-security/vulnerability-assessment/

[29] https://www.esecurityplanet.com/networks/vulnerability-assessment-process/

[30] https://learn.microsoft.com/en-us/security/ai-red-team/ai-risk-assessment

[31] https://www.wiz.io/academy/ai-security-tools

[32] https://www.fhi360.org/wp-content/uploads/drupal/documents/Vulnerability Assessment Methods.pdf

[33] https://bishopfox.com/services/ai-ml-security-assessment

[34] https://www.optiv.com/insights/discover/downloads/ai-model-vulnerability-scan

[35] https://www.fortinet.com/resources/cyberglossary/vulnerability-assessment

[36] https://www.linkedin.com/pulse/ml-model-security-frameworks-industry-best-practices-dr-rabi-prasad-xjnrc

[37] https://securityintelligence.com/posts/ai-powered-vulnerability-management/

[38] https://brightsec.com/blog/vulnerability-testing-methods-tools-and-10-best-practices/

[39] https://www.cossacklabs.com/blog/machine-learning-security-ml-model-protection-on-mobile-apps-and-edge-devices/

[40] https://www.ninjaone.com/blog/data-poisoning/

[41] https://www.crowdstrike.com/en-us/cybersecurity-101/cyberattacks/data-poisoning/

[42] https://www.fortect.com/malware-damage/data-poisoning-definition-and-prevention/

[43] https://www.technologyreview.com/2023/10/23/1082189/data-poisoning-artists-fight-generative-ai/

[44] https://www.nature.com/articles/s41598-024-70375-w

[45] https://arxiv.org/html/2204.05986v3

[46] https://outshift.cisco.com/blog/ai-data-poisoning-detect-mitigate

[47] https://www.lakera.ai/blog/training-data-poisoning

[48] https://www.lasso.security/blog/data-poisoning

[49] https://venturebeat.com/ai/how-to-detect-poisoned-data-in-machine-learning-datasets/

[50] https://github.com/JonasGeiping/data-poisoning

[51] https://www.wiz.io/academy/ai-security

[52] https://learn.snyk.io/lesson/model-theft-llm/

[53] https://www.wwt.com/article/introduction-to-ai-model-security

[54] https://www.mathworks.com/help/rtw/ug/create-a-protected-model-using-the-model-block-context-menu.html

[55] https://www.ncsc.gov.uk/collection/machine-learning-principles

[56] https://www.xenonstack.com/blog/generative-ai-models-security

[57] https://www.scworld.com/perspective/five-ways-to-protect-ai-models

[58] https://www.cisco.com/c/en/us/products/security/machine-learning-security.html

[59] https://blogs.nvidia.com/blog/ai-security-steps/

[60] https://www.usenix.org/conference/usenixsecurity21/presentation/sun-zhichuang

[61] https://owasp.org/www-project-machine-learning-security-top-10/

[62] https://cltc.berkeley.edu/aml/

[63] https://www.holisticai.com/glossary/adversarial-testing

[64] https://www.datacamp.com/blog/adversarial-machine-learning

[65] https://developers.google.com/machine-learning/guides/adv-testing

[66] https://developer.ibm.com/tutorials/awb-adversarial-prompting-security-llms

[67] https://www.nccoe.nist.gov/ai/adversarial-machine-learning

[68] https://hiddenlayer.com/innovation-hub/the-tactics-and-techniques-of-adversarial-ml/

[69] https://www.rstreet.org/commentary/safeguarding-ai-a-policymakers-primer-on-adversarial-machine-learning-threats/

[70] https://www.secureworks.com/blog/dangerous-assumptions-why-adversarial-testing-matters

[71] https://neptune.ai/blog/adversarial-machine-learning-defense-strategies

[72] http://adversarial-ml-tutorial.org/adversarial_training/

[73] https://smartsentryai.com

[74] https://www.paloaltonetworks.com/cyberpedia/ai-in-threat-detection

[75] https://www.easyvista.com/blog/why-ai-is-important-in-monitoring/

[76] https://www.hakimo.ai

[77] https://www.cnet.com/home/security/features/i-thought-id-hate-ai-in-home-security-i-couldnt-have-been-more-wrong/

[78] https://protectai.com

[79] https://www.truehomeprotection.com/leveraging-ai-and-machine-learning-for-advanced-intrusion-detection-in-commercial-security-systems/

[80] https://www.sentinelone.com/cybersecurity-101/data-and-ai/ai-threat-detection/

[81] https://www.pentegrasystems.com/ai-powered-security-monitoring-software/

[82] https://www.nist.gov/itl/ai-risk-management-framework

[83] https://docs.aws.amazon.com/whitepapers/latest/aws-caf-for-ai/security-perspective-compliance-and-assurance-of-aiml-systems.html

[84] https://cloudsecurityalliance.org/blog/2024/08/05/the-future-of-cybersecurity-compliance-how-ai-is-leading-the-way

[85] https://www.captechu.edu/blog/ethical-considerations-of-artificial-intelligence

[86] https://securityintelligence.com/articles/navigating-ethics-ai-cybersecurity/

[87] https://www.exin.com/article/ai-compliance-what-it-is-and-why-you-should-care/

[88] https://online.hbs.edu/blog/post/ethical-considerations-of-ai

[89] https://www.americanbar.org/groups/intellectual_property_law/resources/landslide/2024-summer/incorporating-ai-road-map-legal-ethical-compliance/

[90] https://www.compunnel.com/blogs/the-intersection-of-ai-and-data-security-compliance-in-2024/

[91] https://www.americancentury.com/insights/ai-risks-ethics-legal-concerns-cybersecurity-and-environment/

[92] https://www.domo.com/glossary/why-ai-is-important-for-security-and-compliance

[93] https://ovic.vic.gov.au/privacy/resources-for-organisations/artificial-intelligence-and-privacy-issues-and-challenges/

[94] https://www.weforum.org/stories/2025/01/global-cybersecurity-outlook-complex-cyberspace-2025/

[95] https://www.esecurityplanet.com/trends/ai-and-cybersecurity-innovations-and-challenges/

[96] https://oit.utk.edu/security/learning-library/article-archive/ai-machine-learning-risks-in-cybersecurity/

[97] https://www.csoonline.com/article/3800962/2025-cybersecurity-and-ai-predictions.html

[98] https://www.paloaltonetworks.com/blog/sase/the-future-of-ai-security-three-trends-every-executive-should-watch/

[99] https://www.weforum.org/stories/2024/10/cyber-resilience-emerging-technology-ai-cybersecurity/

[100] https://www.forbes.com/councils/forbestechcouncil/2025/01/06/2025-predictions-the-impact-of-ai-on-cybersecurity/

[101] https://www.weforum.org/stories/2024/01/cybersecurity-ai-frontline-artificial-intelligence/

[102] https://www.nttdata.com/global/en/insights/focus/2024/security-risks-of-generative-ai-and-countermeasures

[103] https://www.darkreading.com/vulnerabilities-threats/new-ai-challenges-test-ciso-teams-2025

[104] https://www.forbes.com/sites/garydrenik/2024/08/08/addressing-security-challenges-in-the-expanding-world-of-ai/

[105] https://keepnetlabs.com/blog/generative-ai-security-risks-8-critical-threats-you-should-know

[106] https://www.synack.com/solutions/security-testing-for-ai-and-llms/

[107] https://www.nightfall.ai/ai-security-101/data-poisoning

[108] https://blogs.infosys.com/digital-experience/emerging-technologies/preventing-data-poisoning-in-ai.html

[109] https://hiddenlayer.com/innovation-hub/understanding-ai-data-poisoning/

[110] https://www.ibm.com/think/topics/data-poisoning

[111] https://securing.ai/ai-security/data-poisoning-ml/

[112] https://www.techtarget.com/searchenterpriseai/definition/data-poisoning-AI-poisoning

[113] https://www.sentinelone.com/cybersecurity-101/cybersecurity/data-poisoning/

[114] https://www.wiz.io/academy/data-poisoning

[115] https://www.leewayhertz.com/ai-model-security/

[116] https://cpl.thalesgroup.com/blog/software-monetization/how-to-protect-your-machine-learning-models

[117] https://outshift.cisco.com/blog/ai-system-security-strategies

[118] https://www.excella.com/insights/ml-model-security-preventing-the-6-most-common-attacks

[119] https://www.sentinelone.com/cybersecurity-101/data-and-ai/ai-data-security/

[120] https://www.mend.io/blog/how-do-i-protect-my-ai-model/

[121] https://www.ncsc.gov.uk/files/Principles-for-the-security-of-machine-learning.pdf

[122] https://viso.ai/deep-learning/adversarial-machine-learning/

[123] https://www.paloaltonetworks.com/cyberpedia/what-are-adversarial-attacks-on-AI-Machine-Learning

[124] https://www.ministryoftesting.com/articles/metamorphic-and-adversarial-strategies-for-testing-ai-systems

[125] https://www.leapwork.com/blog/adversarial-testing

[126] https://www.coursera.org/articles/adversarial-machine-learning

[127] https://hiddenlayer.com/innovation-hub/whats-in-the-box/

[128] https://en.wikipedia.org/wiki/Adversarial_machine_learning

[129] https://roundtable.datascience.salon/decoding-adversarial-attacks-types-and-techniques-in-the-age-of-generative-ai

[130] https://sysdig.com/learn-cloud-native/top-8-ai-security-best-practices/

[131] https://strapi.io/blog/best-ai-security-tools

[132] https://www.azoai.com/article/Advancing-Security-Monitoring-with-Artificial-Intelligence.aspx

[133] https://www.galileo.ai/blog/ai-security-best-practices

[134] https://www.jit.io/resources/appsec-tools/continuous-security-monitoring-csm-tools

[135] https://www.agsprotect.com/blog/ai-and-machine-learning-in-security-services

[136] https://perception-point.io/guides/ai-security/ai-security-risks-frameworks-and-best-practices/

[137] https://www.eweek.com/artificial-intelligence/best-ai-security-tools/

[138] https://intervision.com/blog-enhancing-ai-security-and-compliance/

[139] https://www.evolvesecurity.com/blog-posts/ethical-implementation-of-ai-in-cybersecurity

[140] https://www.tevora.com/resource/what-is-ai-compliance/

[141] https://intone.com/ethical-considerations-in-ai-powered-cybersecurity-solutions/

[142] https://www.ispartnersllc.com/blog/ai-compliance/

[143] https://community.trustcloud.ai/docs/grc-launchpad/grc-101/governance/data-privacy-and-ai-ethical-considerations-and-best-practices/

[144] https://www.wiz.io/academy/ai-compliance

[145] https://darktrace.com/blog/why-artificial-intelligence-is-the-future-of-cybersecurity

[146] https://www.malwarebytes.com/cybersecurity/basics/risks-of-ai-in-cyber-security

[147] https://www.welivesecurity.com/en/cybersecurity/cybersecurity-ai-what-2025-have-store/

[148] https://thehackernews.com/2025/02/ai-and-security-new-puzzle-to-figure-out.html

[149] https://www.msspalert.com/news/ai-based-attacks-top-gartners-list-of-emerging-threats-again

[150] https://www.bankinfosecurity.com/secure-ai-in-2025-lessons-we-have-learned-a-27468

[151] https://www.sentinelone.com/cybersecurity-101/data-and-ai/ai-security-risks/

[152] https://perception-point.io/guides/ai-security/top-6-ai-security-risks-and-how-to-defend-your-organization/